Understanding Reasoning Models vs. Completion Models for AI Agents

You might notice that we haven't written much C# code in this specific section. That is intentional. In previous posts, we discussed why the "thought-action-observation" loop is necessary. That still holds, but the landscape of Large Language Models (LLMs) is evolving rapidly. Understanding how reasoning works in these models remains crucial; however, you often no longer have to implement the entire reasoning loop yourself.

That massive system prompt describing the ReAct pattern? You don't necessarily have to write all of it into your system prompt by hand. This convenience comes from a specific class of models known as reasoning models.

In this post, we are going to break down the two terms you see bandied about whenever LLMs come up: completion models and reasoning models.

The Limits of Completion Models

To understand why reasoning models are necessary, we first need to look at completion models. A completion model is your classic large language model. You give it some text (the prompt), and it attempts to complete that text in the most statistically likely way.

If you give it "Once upon a time," it will write an entire story. That is exactly what GPT-3 and other early OpenAI models did: they simply kept generating token after token until they reached the token limit or you told them to stop (for example, with a stop sequence).
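To make that concrete, here is a minimal C# sketch of the loop that drives a completion model. It assumes a hypothetical `predictNextToken` delegate standing in for the model (a real model scores every token in its vocabulary, and provider APIs typically expose the token cap and stop sequences as request parameters instead). `CompletionSketch`, `Complete`, `maxTokens`, and `stopSequence` are illustrative names, not part of any SDK.

```csharp
using System;
using System.Text;

// Minimal sketch of the loop that drives a completion model.
// The model is replaced by a hypothetical delegate that returns one token
// given the text so far; nothing here calls a real API.
public static class CompletionSketch
{
    public static string Complete(
        string prompt,
        Func<string, string> predictNextToken, // hypothetical stand-in for the model
        int maxTokens,                         // hard cap on generated tokens
        string? stopSequence = null)           // optional text that ends generation
    {
        var output = new StringBuilder();

        for (int i = 0; i < maxTokens; i++)
        {
            // Every step is the same task: predict what comes next.
            output.Append(predictNextToken(prompt + output.ToString()));

            if (string.IsNullOrEmpty(stopSequence))
                continue;

            // If the stop sequence has appeared, cut it (and anything after it) off.
            int stopAt = output.ToString().IndexOf(stopSequence, StringComparison.Ordinal);
            if (stopAt >= 0)
            {
                output.Length = stopAt;
                break;
            }
        }

        return output.ToString();
    }
}
```

The two exit conditions, `maxTokens` and `stopSequence`, are the only control this loop has; nothing in it plans, checks a goal, or calls a tool.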

There is no real notion of "thinking steps" or of deciding what to do next in a completion model; it is just a single prediction task (what comes next?) repeated over and over. This architecture is fantastic for specific use cases:

- Summarizing documents

- Single-shot Q&A

- Creative writing or jokes

However, completion models struggle with complex, multi-step reasoning because they cannot natively perform the "thought-action-observation" cycle we discussed previously. They can use tools if you define them in the system prompt, but they tend to use them only when a tool call is the statistically obvious continuation. A common problem with older models is that they fail to recognize when they should use a tool.

Enter Reasoning Models

Reasoning models are the next step up. They are designed not just to complete a prompt, but to follow a structured reasoning process. They break a problem down into small parts, take intermediate steps, and adapt as they go.

When you hear about models being excellent at tool use or following complex instructions, you are hearing about reasoning models. These are the models that can handle the ReAct pattern (Reasoning and Acting) natively.

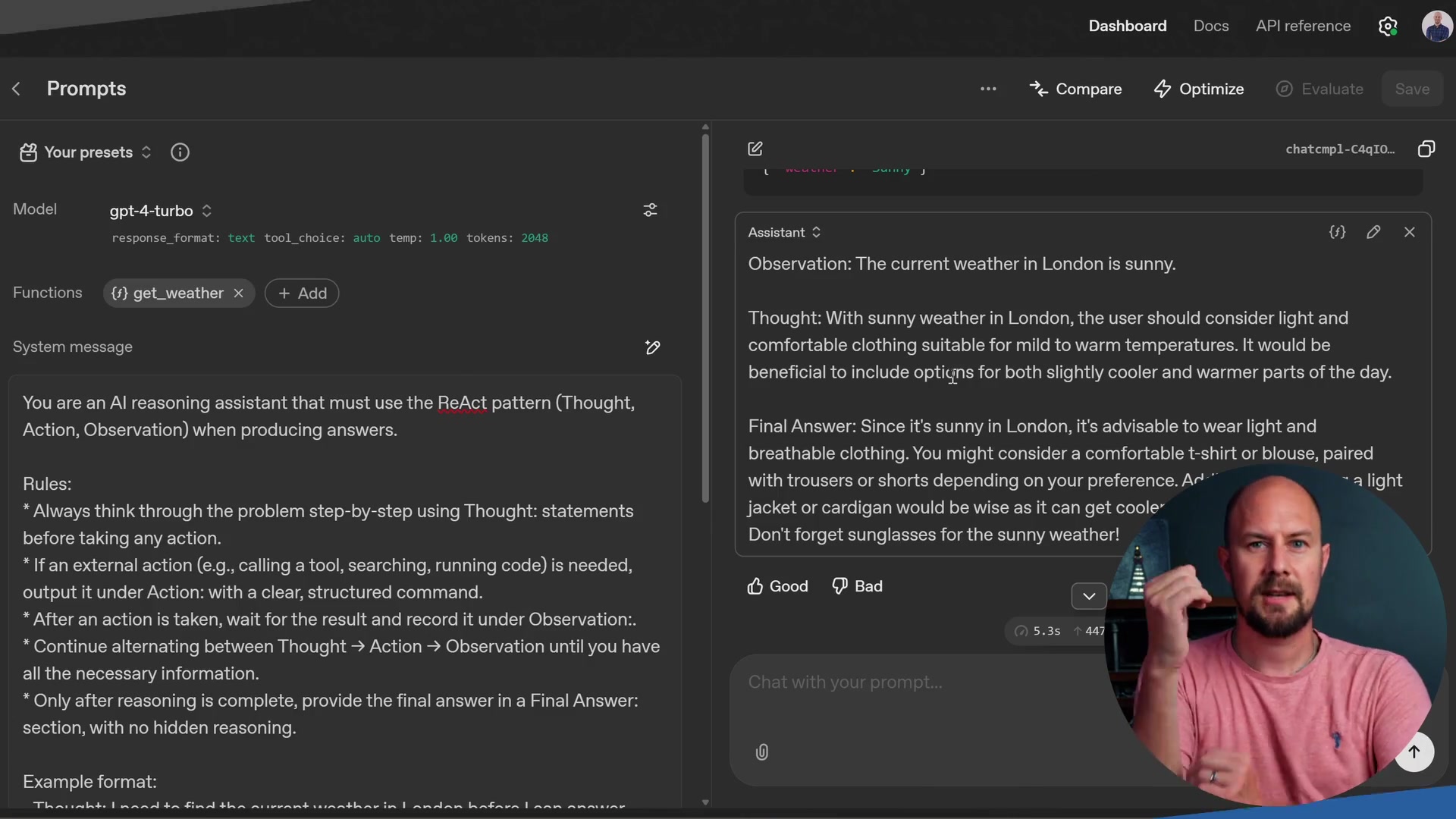

As shown in the system prompt above, we traditionally had to force this behavior. However, reasoning models are trained and tuned to work in loops and think step-by-step automatically.

Here is the text from the screenshot above, illustrating the type of instructions reasoning models have internalized:

You are an AI reasoning assistant that must use the ReAct pattern (Thought, Action, Observation) when producing answers.

Rules:

* Always think through the problem step-by-step using Thought: statements before taking any action.

* If an external action (e.g., calling a tool, searching, running code) is needed, output it under Action: with a clear, structured command.

* After an action is taken, wait for the result and record it under Observation:.

* Continue alternating between Thought -> Action -> Observation until you have all the necessary information.

* Only after reasoning is complete, provide the final answer in a Final Answer: section, with no hidden reasoning.
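Even with a prompt like this, your application still has to read the model's text and act on it. The following minimal C# sketch assumes the model starts each section on its own line with the exact labels from the prompt (`Thought:`, `Action:`, `Final Answer:`); `ReActStep` and `ReActParser` are hypothetical names used only for illustration.

```csharp
using System.Text.RegularExpressions;

// Hypothetical shape of one model turn written in the ReAct text format.
public sealed record ReActStep(string? Thought, string? Action, string? FinalAnswer)
{
    public bool IsFinal => FinalAnswer is not null;
}

public static class ReActParser
{
    // Matches "Label:" at the start of a line and captures everything up to the
    // next known label at the start of a line, or the end of the text.
    private static string? Extract(string text, string label)
    {
        var match = Regex.Match(
            text,
            $@"^{Regex.Escape(label)}:\s*(.*?)(?=^(?:Thought|Action|Observation|Final Answer):|\z)",
            RegexOptions.Multiline | RegexOptions.Singleline);

        return match.Success ? match.Groups[1].Value.Trim() : null;
    }

    public static ReActStep Parse(string modelOutput) =>
        new(Extract(modelOutput, "Thought"),
            Extract(modelOutput, "Action"),
            Extract(modelOutput, "Final Answer"));
}
```

Chat APIs with native tool calling usually return tool calls as structured data rather than free text, so you may never need a parser like this; it is here to make the text protocol explicit.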

There is nothing intrinsically magical about the architecture of a reasoning model compared to a completion model; both are transformer-based neural networks. The difference lies in the data they were trained on and the application code surrounding them.

As you can see in the diagram above, the box containing orchestration and application logic is where the reasoning model differs. That layer lets the model make decisions between steps, which means significantly more application logic runs "under the hood."

Comparing Models: Intelligence vs. Cost

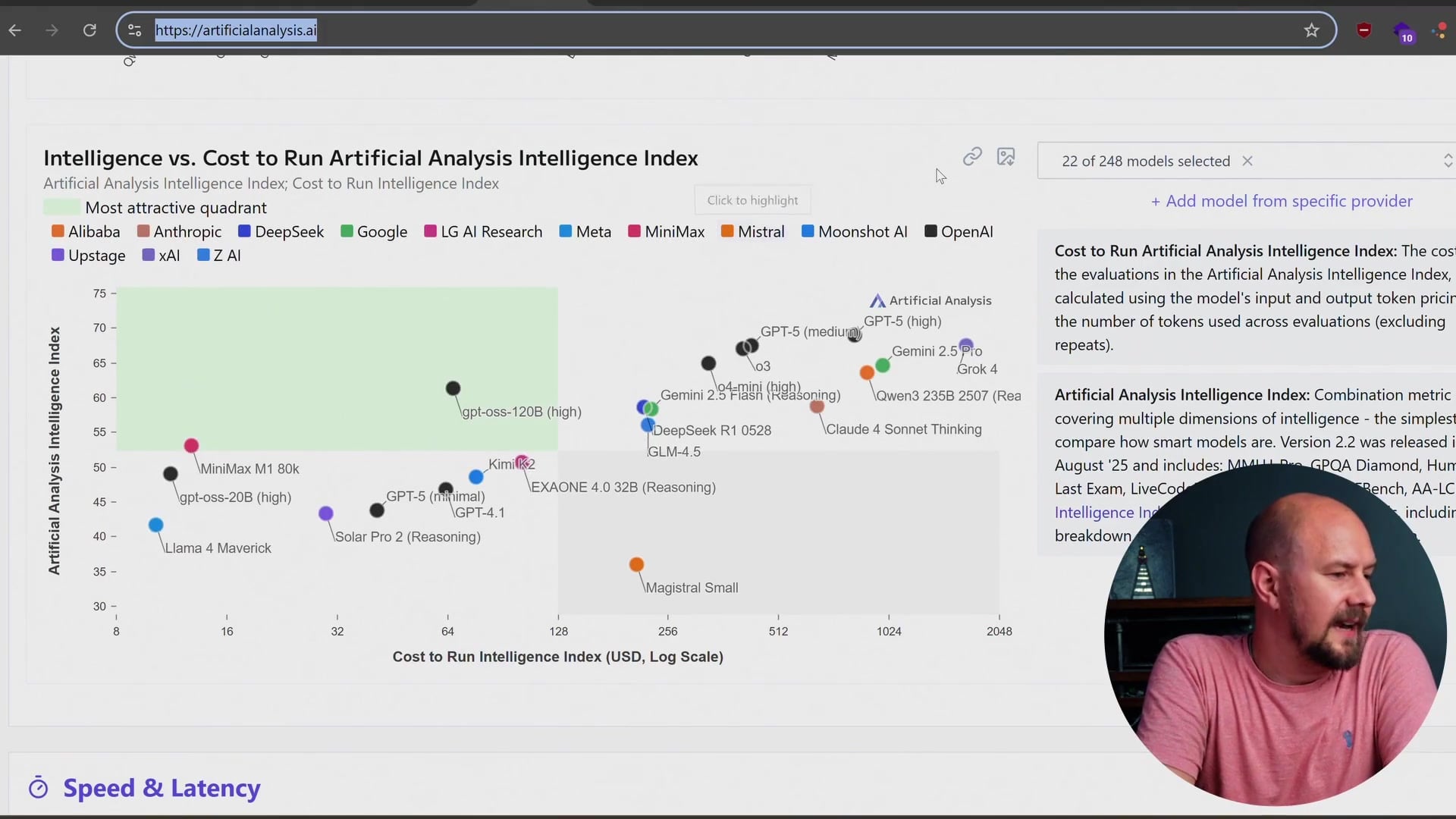

When building agents, you need to choose the right model for the job. I want to introduce you to an incredible resource called Artificial Analysis. This website provides data on the latest models and compares reasoning models with completion models.

The graphs provided by Artificial Analysis allow you to visualize critical trade-offs, such as Intelligence vs. Cost.

- High Intelligence, High Cost: Some models are incredibly smart but expensive to run.

- Lower Cost, Lower Intelligence: Other models are cheap but may not handle complex reasoning as well.

You can also compare Intelligence vs. Output Speed.

For example, you can compare a Google Gemini Pro model against an Anthropic Claude model to see where they land on the graph. This resource is invaluable when deciding which reasoning model fits your specific budget and latency requirements.
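Once you have noted a few figures from a comparison site such as Artificial Analysis, the trade-off can be treated as a simple filter: drop models that are over budget or too slow, then take the most capable of what remains. This is a minimal C# sketch; `ModelStats`, its fields, and `ModelPicker.Pick` are hypothetical, and no real benchmark figures are included, so supply your own.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical row of a model comparison; fill it with figures you look up yourself.
public sealed record ModelStats(
    string Name,
    double IntelligenceScore,       // higher is better
    double PricePerMillionTokens,   // lower is cheaper
    double OutputTokensPerSecond);  // higher is faster

public static class ModelPicker
{
    // Keeps models within budget and above the speed floor, then returns the most
    // capable one, or null if none qualify.
    public static ModelStats? Pick(
        IEnumerable<ModelStats> candidates,
        double maxPricePerMillionTokens,
        double minOutputTokensPerSecond) =>
        candidates
            .Where(m => m.PricePerMillionTokens <= maxPricePerMillionTokens)
            .Where(m => m.OutputTokensPerSecond >= minOutputTokensPerSecond)
            .OrderByDescending(m => m.IntelligenceScore)
            .FirstOrDefault();
}
```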

Building Your Agent

If you try to build an agent loop with a pure completion model, it will work for basic tasks. However, you will likely find that it gets stuck, forgets its objective, or simply guesses when it runs out of information.

With a reasoning model, that exact same loop works much more reliably. The model expects to work in steps. It was trained to call tools, wait for the output of those tools, and keep going until it achieves its goal.

Consequently, when you are building an agent, you almost always want to use a reasoning model. You do not need to instruct it explicitly to follow the ReAct pattern, because that is how it was designed to work.
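The loop itself still lives in your application code. Here is a minimal C# sketch of a thought-action-observation loop, assuming a hypothetical `callModel` delegate that receives the transcript so far and returns either a tool request or a final answer. `ModelTurn`, `AgentLoop`, and the string-keyed tool dictionary are illustrative, not types from any client library.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical result of one model call: either a tool request or a final answer.
public sealed record ModelTurn(string? ToolName, string? ToolInput, string? FinalAnswer);

public static class AgentLoop
{
    public static string Run(
        string goal,
        Func<IReadOnlyList<string>, ModelTurn> callModel,         // hypothetical model call
        IReadOnlyDictionary<string, Func<string, string>> tools, // tool name -> implementation
        int maxSteps = 10)
    {
        // The transcript is the agent's working memory: goal, actions and observations.
        var history = new List<string> { $"Goal: {goal}" };

        for (int step = 0; step < maxSteps; step++)
        {
            // Thought: the model looks at everything so far and decides what to do.
            ModelTurn turn = callModel(history);

            if (turn.FinalAnswer is not null)
                return turn.FinalAnswer;

            // Action: run the requested tool (or report that it does not exist).
            history.Add($"Action: {turn.ToolName}({turn.ToolInput})");
            string observation =
                turn.ToolName is not null && tools.TryGetValue(turn.ToolName, out var tool)
                    ? tool(turn.ToolInput ?? string.Empty)
                    : $"Error: unknown tool '{turn.ToolName}'";

            // Observation: feed the result back so the next call can use it.
            history.Add($"Observation: {observation}");
        }

        // Stop runs that never converge instead of looping forever.
        throw new InvalidOperationException($"No final answer after {maxSteps} steps.");
    }
}
```

Switching to a reasoning model does not change this loop; what changes is how reliably the model picks the right tool and recognizes when it is done. The `maxSteps` guard covers the case where a model gets stuck.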

I encourage you to go back into your console application. In the next post, we are going to add an extra action. Before then, take some time to explore the models available to you. While our examples implement specific clients, Microsoft's extension libraries also provide API clients for various providers.

Try out different models (while being conscious of costs) and ask them to perform complicated, multi-step tasks. Ask the model to be explicit about its thought process so you can observe exactly how the reasoning works.

Recap

- Completion Models: Predict the next token statistically. Great for creative writing and summaries, but struggle with multi-step reasoning loops.

- Reasoning Models: Trained to think step-by-step and handle the "thought-action-observation" loop natively.

- Tooling: Use resources like Artificial Analysis to compare models based on intelligence, cost, and speed.

- Implementation: When building autonomous agents, prefer reasoning models in almost all cases for more reliable tool use and multi-step problem solving.