Understanding LLM Context: Why Large Language Models Are Stateless

We have covered how an LLM can predict the next word in a string of text and how you can control that predictor by using a system prompt. Now, let's look at context windows and history.

One really important thing to remember about large language models is that they are stateless. To explain exactly what I mean by stateless, I am going to use a C# analogy.

The C# Analogy: OOP vs. Functional Programming

As you probably know, there are a couple of different patterns you can use when writing C# code. You can do object-oriented programming (OOP) where you create classes. Each instance of a class captures some kind of internal state, and you can modify that state with public methods and properties.

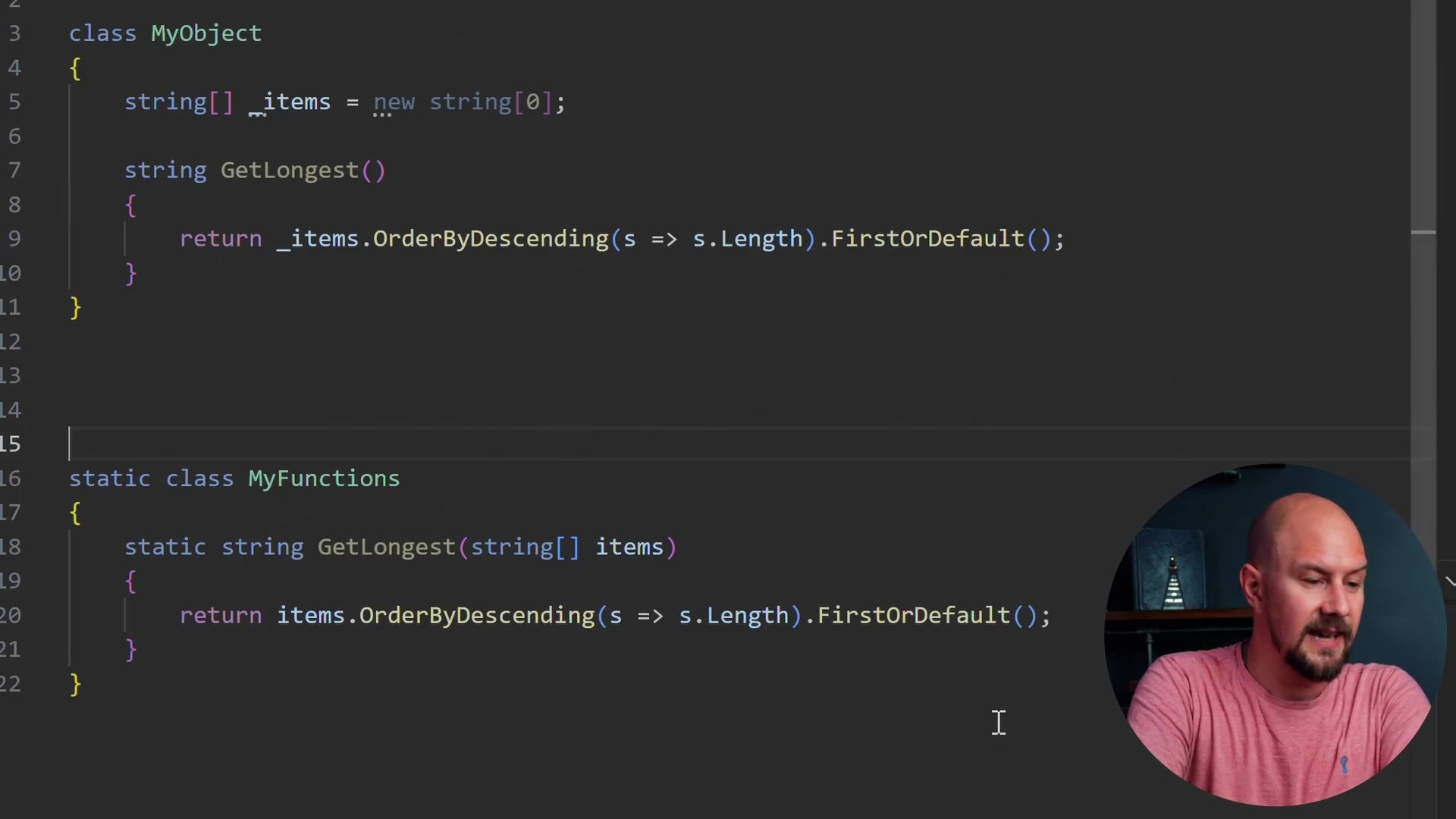

Here is an example of a class. It has some internal state (the private field _items) and a method that returns a value based on that internal state.

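    using System.Linq;

    class MyObject
    {
        // Internal state: lives inside the instance between calls.
        private string[] _items = new string[0];

        // Reads the stored state; the caller passes nothing in.
        public string GetLongest()
        {
            return _items.OrderByDescending(s => s.Length).FirstOrDefault();
        }
    }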

Now, another pattern that is becoming increasingly popular, and one that C# has been embracing in recent years, is functional programming.

In functional programming, you do not have classes that capture and maintain their internal state. Everything in your code is done using pure functions. These are functions that take an input and produce an output, have no side effects, and do not read or modify anything outside their arguments. You do not rely on stored memory or private member variables.

In the example below, we are doing the same thing (getting the longest item from some strings), but because it is a pure function, we have to pass in that array.

    using System.Linq;

    static class MyFunctions
    {
        // Pure function: everything it needs arrives through the parameter.
        public static string GetLongest(string[] items)
        {
            return items.OrderByDescending(s => s.Length).FirstOrDefault();
        }
    }
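To make the contrast concrete, here is a minimal sketch that runs both styles side by side. The names ItemStore, PureFunctions, and Demo are made up for this illustration and are not part of the examples above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Stateful: the answer depends on what earlier calls stored in the instance.
class ItemStore
{
    private readonly List<string> _items = new List<string>();

    public void Add(string item) => _items.Add(item);

    public string GetLongest() =>
        _items.OrderByDescending(s => s.Length).FirstOrDefault();
}

// Pure: the answer depends only on the argument passed in.
static class PureFunctions
{
    public static string GetLongest(string[] items) =>
        items.OrderByDescending(s => s.Length).FirstOrDefault();
}

static class Demo
{
    public static void Main()
    {
        var store = new ItemStore();
        store.Add("cat");
        Console.WriteLine(store.GetLongest()); // cat
        store.Add("giraffe");
        Console.WriteLine(store.GetLongest()); // giraffe (same call, different answer)

        var input = new[] { "cat", "giraffe" };
        Console.WriteLine(PureFunctions.GetLongest(input)); // giraffe
        Console.WriteLine(PureFunctions.GetLongest(input)); // giraffe (same input, same answer)
    }
}
```

The stateful call takes no arguments, so you cannot tell what it will return without knowing the object's history. The pure call tells you everything in its argument list.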

How This Applies to LLMs

Why am I telling you this? What does this have to do with LLMs?

LLMs work like the functional programming example above. Because the model is stateless, everything it needs to know must be passed in on every single call.

In fact, it can be useful to think of a call to an LLM just like a function. Imagine replacing the GetLongest function name with CompleteTextUsingLLM.

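    using System.Linq;

    static class MyFunctions
    {
        // Thought experiment: only the name has changed. In a real call,
        // items (the messages so far) would be sent to the model and the
        // model's completion would be returned.
        public static string CompleteTextUsingLLM(string[] items)
        {
            return items.OrderByDescending(s => s.Length).FirstOrDefault();
        }
    }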

In this analogy, the items array represents all of the messages that have come before. You have to send all of these messages with every single request you make to the LLM.

This means everything the LLM needs to know, including your system prompts, your tool definitions (which we will cover later), and your entire conversation history, needs to get passed into the LLM with every single request. You essentially cannot have state that is managed inside the LLM.
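As a rough sketch of what this looks like in practice, the code below keeps the conversation history on the caller's side and resends all of it on each turn. Everything here is an assumption made for illustration: the ChatMessage record, the SendTurn helper, and the body of CompleteTextUsingLLM, which is a stand-in that makes no network call and only reports how many messages it received.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical message shape; real APIs define their own.
record ChatMessage(string Role, string Content);

static class StatelessChat
{
    // Stand-in for a real LLM call (hypothetical, no network access).
    // It can only "know" what is in the list it receives.
    public static string CompleteTextUsingLLM(IReadOnlyList<ChatMessage> messages)
    {
        return $"(reply based on {messages.Count} messages)";
    }

    // One turn: append the user message, send EVERYTHING, append the reply.
    public static string SendTurn(List<ChatMessage> history, string userText)
    {
        history.Add(new ChatMessage("user", userText));
        string reply = CompleteTextUsingLLM(history);
        history.Add(new ChatMessage("assistant", reply));
        return reply;
    }

    public static void Main()
    {
        var history = new List<ChatMessage>
        {
            new ChatMessage("system", "You are a helpful assistant.")
        };
        Console.WriteLine(SendTurn(history, "My name is Sam."));  // (reply based on 2 messages)
        Console.WriteLine(SendTurn(history, "What is my name?")); // (reply based on 4 messages)
    }
}
```

The state lives in the history list that the caller owns. If the second turn sent only the new question, the model would have no way to know the name given in the first turn.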

Managing State for Agents

An LLM call is essentially a pure function: it takes in a bunch of text (such as JSON or chat messages) and produces a new piece of text. Whenever we are working with LLMs, think of them in this functional programming model: stateless, atomic operations that take a long piece of text and generate a new piece of text to append to the end of it.

We are not doing object-oriented programming with LLMs. We are doing functional programming.

When we come to build an agent later on, a lot of the work is actually going to be about managing, summarizing, and keeping control of this conversation history. A major part of what an agent does is manage that text so that when it makes LLM calls, it sends everything off in the most efficient way possible to give it the highest chance of success.
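One simple form of this history management can be sketched as follows: keep the system messages and as many of the most recent messages as fit within a size budget. This is only an illustration under stated assumptions. The ChatMessage record and the Trim helper are hypothetical, character count is a crude stand-in for tokens, and dropping old messages is just one strategy (summarizing them is another, not shown here).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical message shape; real APIs define their own.
record ChatMessage(string Role, string Content);

static class HistoryManager
{
    // Keeps every system message plus the newest other messages that fit
    // within maxChars. Character count is a crude stand-in for tokens.
    public static List<ChatMessage> Trim(IReadOnlyList<ChatMessage> history, int maxChars)
    {
        var system = history.Where(m => m.Role == "system").ToList();
        int used = system.Sum(m => m.Content.Length);

        var kept = new List<ChatMessage>();
        // Walk backwards so the most recent messages are considered first.
        for (int i = history.Count - 1; i >= 0; i--)
        {
            var m = history[i];
            if (m.Role == "system") continue;
            if (used + m.Content.Length > maxChars) break;
            used += m.Content.Length;
            kept.Add(m);
        }
        kept.Reverse(); // restore chronological order
        return system.Concat(kept).ToList();
    }

    public static void Main()
    {
        var history = new List<ChatMessage>
        {
            new ChatMessage("system", "Be brief."),
            new ChatMessage("user", "First question"),
            new ChatMessage("assistant", "First answer"),
            new ChatMessage("user", "Second question"),
        };
        // With a 40-character budget, the oldest user message is dropped.
        foreach (var m in Trim(history, 40))
            Console.WriteLine($"{m.Role}: {m.Content}");
    }
}
```

Trim is itself a pure function: it returns a new list and leaves the full history untouched, so the agent can keep the complete record while sending the model only what fits.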

Recap

- LLMs are stateless: They do not remember previous interactions on their own.

- Pure Functions: Treat an LLM call like a pure function, where the output depends only on the input you pass in and the call has no side effects.

- Context is Key: You must pass the entire conversation history, system prompts, and tool definitions with every single request.

- Agent Responsibility: The "intelligence" of an agent often lies in how it manages this external state before sending it to the model.